7.8 Metacognition and 7.9 Summary

7.8 Metacognition

Throughout this chapter we have cited the importance of metacognition: thinking about one’s own thinking and cognitive processes. Metacognition influences the decision-making process by guiding how people adapt to the particular decision situation. Here we highlight five of the most critical elements of metacognition for macrocognition.

1. Knowing what you don’t know. That is, being aware that your decision processes, or the processes needed to maintain adequate situation awareness, are inadequate because important cues are missing, and that obtaining those cues could substantially improve situation awareness and assessment.

2. The decision to “purchase” further information. This can be seen as a decision within the decision. Purchasing may involve a financial cost, such as the cost of an additional medical test required to reduce uncertainty on a diagnosis. It also may involve a time cost, such as the added time required before declaring a hurricane evacuation, to obtain more reliable information regarding the forecast hurricane track. In these cases, metacognition is revealed in the ability to balance the costs of purchase against the value of the added information [476]. The metacognitive skills here also clearly involve keeping track of the passage of time in dynamic environments, to know when a decision may need to be executed even without full information.

3. Calibrating confidence in what you know. As we have described above, overconfidence is frequently manifest in human cognition [351]. When people are overconfident in their knowledge, they fail both to seek additional information that would reduce uncertainty and to plan for contingencies in case their situation assessment is wrong.

4. Choosing the decision strategy adaptively. As we have seen above, a variety of decision strategies can be chosen: heuristics, holistic processing, System 1, and recognition-primed decisions, or the more elaborate, effort-demanding algorithms and analytic decision strategies of System 2. The expert has many of these in her toolkit, but metacognitive skills are necessary to decide which to employ when, as Amy did in our earlier example by deciding to switch from an RPD pattern match to a more time-consuming analytical strategy when the former failed.

5. Processing feedback to improve the toolkit. Element 4 relates to a single instance of a decision: in Amy’s case, the diagnosis and choice of treatment for one patient. However, metacognition can and should also be employed to process the outcomes of a series of decisions, to realize from negative outcomes that the strategies in use may be wanting, and to learn to change the rules by which different strategies are deployed, just as a student who performs poorly on a series of tests may decide to alter his or her study habits. Deploying such metacognitive skills requires some effort to obtain and process feedback on decision outcomes, something we saw was relatively challenging in decision making.
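The trade-off in element 2, balancing the cost of further information against its value, can be made concrete with a small expected-utility calculation. The following is a minimal sketch, not a method given in the text. It assumes the hurricane evacuation example, and every number in it is hypothetical, including the probabilities, the utilities, and the names `PROBS` and `PAYOFF`. It also makes the simplifying assumption that the purchased information is perfect, so the result is an upper bound on what a real, imperfect forecast would be worth.

```python
# Hypothetical hurricane example. All probabilities and utilities below are
# illustrative assumptions, not values taken from the text.
PROBS = {"hit": 0.25, "miss": 0.75}  # current belief about the hurricane track
PAYOFF = {  # utility of each action under each state (higher is better)
    "evacuate": {"hit": -10, "miss": -10},
    "stay": {"hit": -100, "miss": 0},
}


def expected_utility(action, probs, payoff):
    """Expected utility of one action under the current beliefs."""
    return sum(probs[state] * payoff[action][state] for state in probs)


def best_without_information(probs, payoff):
    """Expected utility of the best action chosen on current beliefs alone."""
    return max(expected_utility(action, probs, payoff) for action in payoff)


def value_of_perfect_information(probs, payoff):
    """Expected gain from learning the true state before choosing an action."""
    with_information = sum(
        probs[state] * max(payoff[action][state] for action in payoff)
        for state in probs
    )
    return with_information - best_without_information(probs, payoff)


def should_purchase(probs, payoff, cost):
    """Purchase only if the information is worth more than it costs.

    The cost (money or delay) must be expressed in the same utility units.
    """
    return value_of_perfect_information(probs, payoff) > cost
```

With these assumed numbers, acting immediately favors evacuation (expected utility of -10 versus -25 for staying), and perfect information about the track would be worth 7.5 utility units. Waiting for a better forecast would therefore be justified only if the delay costs less than that, which is the kind of comparison the metacognitive skill in element 2 requires.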

7.8.1 Principles for Improving Metacognition

As with other elements of macrocognition, metacognition can be improved by some combination of changing the person (through training or experience) or changing the task (through task and technology redesign).

1. Ease information retrieval. Requiring people to manually retrieve or select information is more effortful than simply requiring them to scan to a different part of the visual field [138, 477], a characteristic that penalizes multilevel menus and decluttering tools that require people to select the level of decluttering they want. Pop-up messages and other automation features that infer and satisfy a person’s information needs can relieve the effort of accessing information [478].

2. Highlight benefits and minimize effort of engaging decision aids. Designers must understand the effort costs generated by potentially powerful features in interfaces. Such costs may be expressed in terms of the cognitive effort required to learn the feature or the mental and physical effort and time cost required to load or program the feature. Many people are disinclined to invest such effort even if the anticipated gains in productivity are high, and so the feature will go unused.

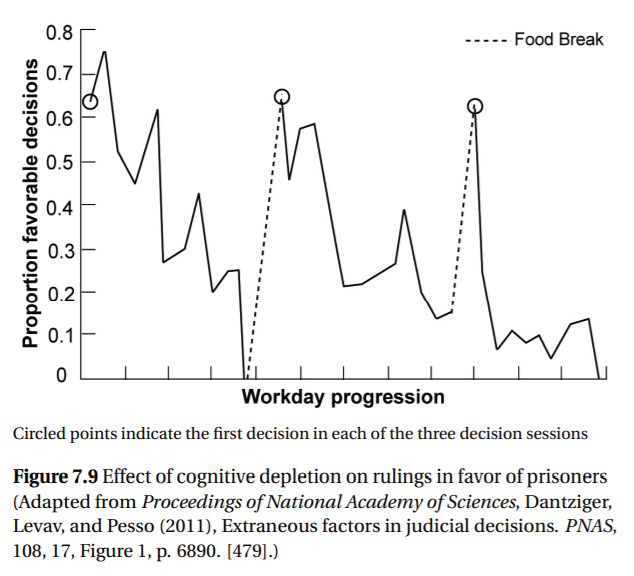

3. Manage cognitive depletion. An extended series of demanding decisions can incline people towards an intuitive approach to decisions, even when an analytic one would be more effective. People tend to make the easy or default decision as they become fatigued. Coaching people on this tendency might help them take rest breaks, plan complicated decisions early rather than late in the day, and avoid systems that introduce unnecessary decisions. As an example, Figure 7.9 shows how cognitive depletion changes the rulings of Israeli judges making parole decisions [479]. The timeline starts at the beginning of the day and each open circle represents the first decision after a break. The pattern cannot be explained by obvious confounding factors such as the gravity of the offense or time served. Similar effects are seen in other domains, such as physicians choosing to prescribe more antibiotics as they become cognitively depleted over the day [480].

4. Train metacognition. Training can improve metacognition by teaching people to: (1) consider cues needed to develop situation awareness, (2) check situation assessments or explanations for completeness and consistency with cues, (3) analyze data that conflict with the situation assessment, and (4) recognize when too much conflict exists between the assessment and the cues. Training metacognition also needs to consider when it is appropriate to rely on automation and when it is not [435].

Figure 7.9 Effect of cognitive depletion on rulings in favor of prisoners. (Adapted from Danziger, Levav, and Avnaim-Pesso (2011), Extraneous factors in judicial decisions. Proceedings of the National Academy of Sciences, 108(17), Figure 1, p. 6890 [479].)

7.9 Summary

We discussed decision making and the factors that make it more and less effective. Normative mathematical models of utility theory describe how people should compare alternatives and make the “best” decision. However, limited cognitive resources, time pressure, and unpredictable changes often make this approach unworkable, and people use simplifying heuristics, which make decisions easier but also lead to systematic biases. In many situations, people have years of experience that enable them to refine their decision heuristics and avoid many biases. Decision makers also adapt their decision making by moving from skill- and rule-based decisions to knowledge-based decisions according to the degree of risk, time pressure, and experience. This adaptive process must be considered when improving decision making through task redesign, choice architecture, decision-support systems, or training.

Techniques to shape decision making discussed in this chapter offer surprisingly powerful ways to affect decisions, and so the ethical dimensions of these choices should be carefully considered. As an example, should the default setting be designed to provide people with the option that aligns with their preference, what is best for them, what is likely to maximize profits, or what might be best for society [18]? The concepts in this chapter have important implications for safety and human error, discussed in Chapter 16. In many ways the decision-support systems described in this chapter can be considered as displays or automation. Chapter 11 addresses automation, and we turn to displays in the next chapter.