7.3 (continued)

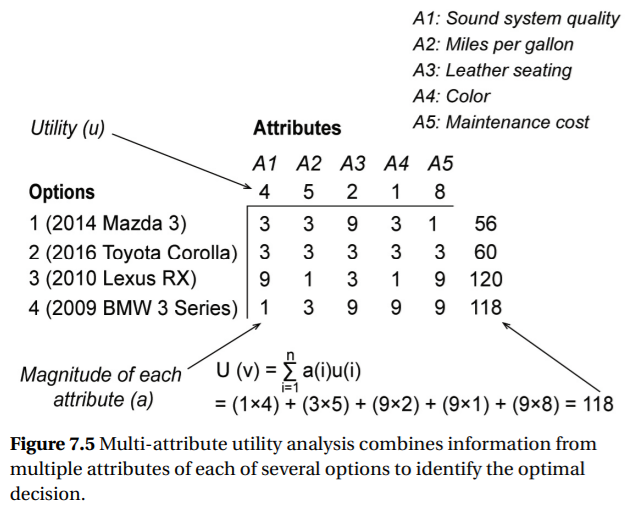

Figure 7.5 shows the analysis of four different options, where the options are different cars that a student might purchase. Each car is described by five attributes, such as the sound quality of the stereo, fuel economy, insurance costs, color, and maintenance costs. The utility of each attribute reflects its importance to the student. For example, the student cannot afford frequent and expensive repairs, so the utility (importance) of the fifth attribute, maintenance costs, is quite high (8), whereas the student cares less about the sound quality of the stereo (4) and very little about the fourth attribute, color (1). The cells in the decision table show the magnitude of each attribute for each option; in this example, higher values reflect a more desirable situation. For example, the third car has a poor stereo but low maintenance costs, whereas the first car has a slightly better stereo but high maintenance costs. Combining the magnitudes of all the attributes, each weighted by its utility, shows that the third car (option 3) is the most appealing, or “optimal,” choice and that the first car (option 1) is the least appealing.

Figure 7.5 Multi-attribute utility analysis combines information from multiple attributes of each of several options to identify the optimal decision.
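The combination step can be made concrete as a weighted sum: each option's overall score is the sum, across attributes, of the attribute's utility multiplied by the option's magnitude on that attribute. The Python sketch below assumes that additive rule. Only the stereo (4), color (1), and maintenance (8) utilities come from the text; the fuel-economy and insurance utilities and every magnitude are hypothetical placeholders chosen to match the qualitative description, not the values in Figure 7.5, and the names `mau_score` and `rank_options` are illustrative.

```python
# Additive multi-attribute utility sketch:
#   score(option) = sum over attributes of utility(attribute) * magnitude(option, attribute)
# Utilities for stereo (4), color (1), and maintenance (8) follow the text;
# everything else below is a HYPOTHETICAL placeholder.

WEIGHTS = {
    "stereo": 4,
    "fuel_economy": 6,  # hypothetical
    "insurance": 5,     # hypothetical
    "color": 1,
    "maintenance": 8,   # a high magnitude means LOW maintenance cost (more desirable)
}

# Hypothetical magnitudes on a 1-10 scale; higher is always more desirable.
OPTIONS = {
    "car_1": {"stereo": 3, "fuel_economy": 4, "insurance": 4, "color": 5, "maintenance": 1},
    "car_2": {"stereo": 6, "fuel_economy": 5, "insurance": 5, "color": 4, "maintenance": 5},
    "car_3": {"stereo": 2, "fuel_economy": 6, "insurance": 6, "color": 3, "maintenance": 9},
    "car_4": {"stereo": 7, "fuel_economy": 3, "insurance": 5, "color": 8, "maintenance": 4},
}


def mau_score(magnitudes: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted sum of one option's attribute magnitudes."""
    return sum(weights[attr] * magnitudes[attr] for attr in weights)


def rank_options(options: dict, weights: dict) -> list[tuple[str, float]]:
    """Return (option, score) pairs sorted from most to least appealing."""
    scores = {name: mau_score(mags, weights) for name, mags in options.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


for name, score in rank_options(OPTIONS, WEIGHTS):
    print(f"{name}: {score}")
```

With these placeholder numbers the third car ranks first and the first car last, mirroring the pattern described for Figure 7.5; the heavily weighted maintenance attribute dominates the ranking.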

Multi-attribute utility theory, shown in Figure 7.5, assumes that all outcomes are certain. However, life is uncertain, and probabilities often define the likelihood of various outcomes (e.g., you cannot predict maintenance costs precisely). Expected value theory is another normative model, one that addresses uncertainty. It replaces the concept of utility in the previous context with that of expected value. The theory applies to any “gamble” type of decision, in which each choice has one or more outcomes and each outcome has a worth and a probability. For example, a person might be offered a choice between:

1. Winning $50 with a probability of 1.0 (a guaranteed win), or
2. Winning $200 with a probability of 0.30.

Expected value theory assumes that the overall value of a choice is the sum of the worth of each outcome multiplied by its probability (Equation 7.1), where E(v) is the expected value of the choice, p(i) is the probability of the ith outcome, and v(i) is the value of the ith outcome.

$$E(v) = \sum_{i} p(i)\, v(i) \tag{7.1}$$

The expected value of the first choice in the example is $50 × 1.0 = $50, a certain win of $50. The expected value of the second choice is $200 × 0.30 = $60, meaning that if the choice were made many times, one would expect an average gain of $60, which is higher than $50. Therefore, the normative decision maker should always choose the second gamble. In reality, people tend to avoid risk and take the sure thing [372].
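Equation 7.1 can be checked numerically for the $50-versus-$200 example. The sketch below is a minimal illustration: the function name `expected_value` is hypothetical, and the gamble is written with an explicit $0 outcome for the remaining 0.70 probability, which the text leaves implicit.

```python
import math


def expected_value(outcomes: list[tuple[float, float]]) -> float:
    """Equation 7.1: E(v) = sum over outcomes of p(i) * v(i).

    `outcomes` holds (probability, value) pairs for one choice;
    the probabilities must sum to 1 (checked with a float tolerance).
    """
    if not math.isclose(sum(p for p, _ in outcomes), 1.0):
        raise ValueError("outcome probabilities must sum to 1")
    return sum(p * v for p, v in outcomes)


sure_thing = [(1.0, 50.0)]             # win $50 with certainty
gamble = [(0.30, 200.0), (0.70, 0.0)]  # win $200 with p = 0.30, otherwise nothing

print(expected_value(sure_thing))  # 50.0
print(expected_value(gamble))      # about 60
```

The larger expected value of the gamble is what leads the normative decision maker to prefer it, even though it pays nothing 70% of the time.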

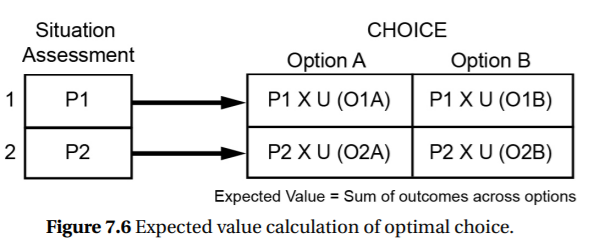

Figure 7.6 shows two states of the world, 1 and 2, which are generated from situation assessment. Each has a probability, P1 and P2, respectively. The two choice options, A and B, may have four different outcomes, shown in the four cells to the right. Each option may also have a different utility (U) in each state of the world, and that utility can be positive or negative. The normative view of decision making dictates choosing the option with the highest (most positive) expected utility: the sum, across the two states, of each state's probability multiplied by the option's utility in that state.

Descriptive decision making accounts for how people actually make decisions. People can depart from the optimal, normative expected utility model in three ways. First, people do not always try to maximize expected value (EV), nor should they, because decision criteria other than expected value can be more important. Second, people often shortcut the time- and effort-consuming steps of the normative approach. They do this because time and resources are not adequate to “do things right” according to the normative model, or because they have expertise that points them directly to the right decision. Third, these shortcuts sometimes result in errors and poor decisions. Each of these represents an increasingly large departure from normative decision making.
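The normative rule for the layout of Figure 7.6 can be sketched as follows: for each option, multiply its utility in each state by that state's probability, add the products, and choose the option with the largest total. The probabilities (P1 = 0.6, P2 = 0.4), the utilities, and the function names are all hypothetical illustration values.

```python
# Expected-utility choice for two states of the world and two options (Figure 7.6 layout).
# All probabilities and utilities are HYPOTHETICAL.

STATE_PROBS = {"state_1": 0.6, "state_2": 0.4}  # P1 and P2; must sum to 1

UTILITIES = {  # U(option, state); may be positive or negative
    "A": {"state_1": 10, "state_2": -5},
    "B": {"state_1": 4, "state_2": 3},
}


def expected_utility(option: str) -> float:
    """Sum over states of P(state) * U(option, state)."""
    return sum(p * UTILITIES[option][state] for state, p in STATE_PROBS.items())


def normative_choice() -> str:
    """Pick the option with the highest (most positive) expected utility."""
    return max(UTILITIES, key=expected_utility)


for option in UTILITIES:
    print(option, round(expected_utility(option), 2))
print("choose", normative_choice())
```

Here option A wins (expected utility 4.0 versus 3.6) even though it is the only option with a negative outcome in one state.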

As an example of using a decision criterion other than maximizing expected utility, people may instead choose to minimize the possibility of suffering the maximum loss. This could certainly be considered rational, particularly if one’s resources to deal with the loss were limited. It explains why people purchase insurance, even though such a purchase does not maximize their expected gain. If it did, the insurance companies would soon be out of business! Not many people have the ability to absorb a $100,000 medical bill that might accompany a severe health problem. The importance of using different decision criteria reflects the mismatch between the simplifying assumptions of expected utility and the reality of actual situations.

Most decisions involve shortcuts relative to the normative approach. Simon [373] argued that people do not usually aim to make the absolutely best, or optimal, decision. Instead, they opt for a choice that is “good enough” for their purposes: something satisfactory. This shortcut method of decision making is termed satisficing. In satisficing, the decision maker generates and evaluates choices only until finding one that is acceptable rather than one that is optimal; going beyond this choice to identify something better is not worth the effort. Satisficing is a very reasonable approach given that people have limited cognitive capacity and limited time. Indeed, if minimizing the time (or effort) needed to make a decision is itself considered an attribute of the decision process, then satisficing or other shortcut heuristics can sometimes be said to be optimal, for example, when a decision must be made before a deadline or all is lost. In our car example, a satisficing strategy would be to take the first car that gets the job done rather than making the laborious comparisons needed to find the best one.
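The two alternatives to maximizing expected value described above, minimizing the maximum loss and satisficing, can be contrasted in a short Python sketch. Only the $100,000 medical bill comes from the text; the 1% probability, the $1,500 premium, the car scores, the aspiration level of 70, and all function names are hypothetical.

```python
# Two departures from maximizing expected value, sketched on HYPOTHETICAL numbers.


def expected_value(outcomes):
    """Sum of probability * value over (probability, value) pairs."""
    return sum(p * v for p, v in outcomes)


def worst_case(outcomes):
    """Most negative value among an option's possible outcomes."""
    return min(v for _, v in outcomes)


# 1) Minimizing the maximum loss (the insurance decision).
#    The $100,000 bill is from the text; the 1% chance and $1,500 premium are hypothetical.
INSURANCE = {
    "buy_insurance": [(1.0, -1_500)],               # pay the premium for certain
    "no_insurance": [(0.01, -100_000), (0.99, 0)],  # rare but ruinous bill
}

max_ev_choice = max(INSURANCE, key=lambda o: expected_value(INSURANCE[o]))
min_max_loss_choice = max(INSURANCE, key=lambda o: worst_case(INSURANCE[o]))


# 2) Satisficing versus optimizing (the car example).
def satisfice(options, score, good_enough):
    """Stop at the first option whose score reaches the aspiration level."""
    for n_evaluated, option in enumerate(options, start=1):
        if score(option) >= good_enough:
            return option, n_evaluated
    return None, len(options)  # nothing acceptable was found


def optimize(options, score):
    """Evaluate every option and return the best one."""
    return max(options, key=score), len(options)


# Hypothetical overall scores, listed in the order the student inspects the cars.
CAR_SCORES = {"car_1": 55, "car_2": 72, "car_3": 90, "car_4": 68}
cars = list(CAR_SCORES)

print(max_ev_choice, min_max_loss_choice)               # no_insurance buy_insurance
print(satisfice(cars, CAR_SCORES.get, good_enough=70))  # ('car_2', 2)
print(optimize(cars, CAR_SCORES.get))                   # ('car_3', 4)
```

With these numbers, maximizing expected value rejects insurance (an expected loss of about $1,000 versus a certain $1,500), while minimizing the maximum loss buys it. The satisficer stops at the second car after two evaluations; the optimizer finds the better third car, but only after inspecting all four.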
Satisficing and other shortcuts are often quite effective [366], but they can also lead to biases and poor decisions, as we discuss below. Our third characteristic of descriptive decision making concerns the human limits that contribute to decision errors. A general source of error is people's failure to recognize when shortcuts are inappropriate for the situation and to adopt the more laborious decision processes instead. Because this area is so important, and its analysis generates a number of design solutions, we dedicate the next section to this topic.