7.6 Problem Solving and Troubleshooting

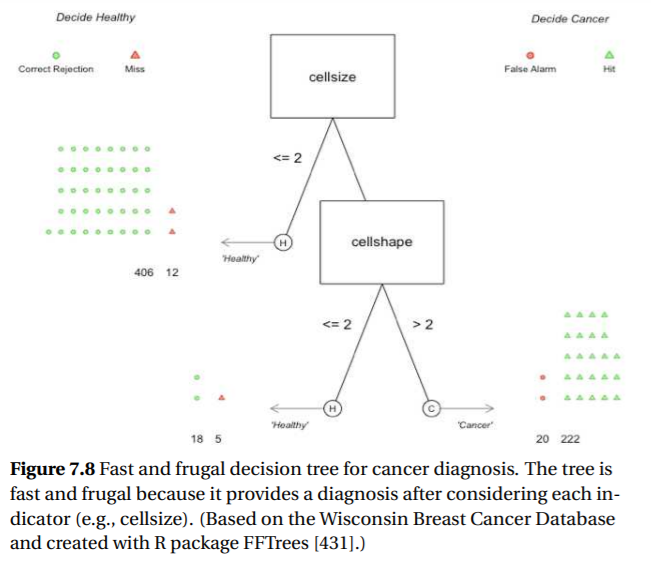

Many of the decision tasks studied in human factors require diagnosis, the process of inferring the underlying or “true” state of a system. Examples of inferential diagnosis include medical diagnosis, fault diagnosis of a mechanical or electrical system, and inference of weather conditions from measurement values or displays. Sometimes the diagnosis concerns the current state, and sometimes it concerns a predicted or forecast state, as in weather forecasting or economic projections.

The cognitive processes of problem solving and troubleshooting are closely linked because they share so many elements. Both start with a difference between an initial state and a final goal state, and both typically require a number of cognitive operations to reach the latter. The identity of those operations is often not immediately apparent to the person engaged in problem solving. Troubleshooting is often embedded within problem solving because it is sometimes necessary to identify a problem before solving it. Thus, we may need to understand why our car engine does not start (troubleshooting) before trying to implement a solution (problem solving). Although troubleshooting is often a step within a problem-solving sequence, problem solving may occur without troubleshooting if the problem is solved through trial and error or if a solution is encountered by chance. While both problem solving and troubleshooting involve attaining a state of knowledge, both also typically involve performing specific actions: troubleshooting usually requires a series of tests whose outcomes are used to diagnose the problem, whereas problem solving usually involves actions to implement the solution. Both are considered iterative processes of perceptual, cognitive, and response-related activities.
Both problem solving and troubleshooting impose heavy cognitive demands, which limit human performance [461, 462]. Many of these limits appear in the heuristics and biases discussed earlier in the chapter in the context of decision making. In troubleshooting, for example, people usually maintain no more than two or three active hypotheses in working memory about the possible source of a problem [463]. More hypotheses than this overload the limited capacity of working memory, because each hypothesis is complex enough to occupy more than a single chunk. Furthermore, when testing hypotheses, people tend to focus on only one hypothesis at a time, trying to confirm or reject it. Thus, in troubleshooting our car, we will probably assume one problem and perform tests to confirm that it is the problem.

Naturally, troubleshooting success depends on attending to the appropriate cues and test outcomes. This dependency makes troubleshooting susceptible to attentional and perceptual biases. The operator may attend selectively to very salient outcomes (bottom-up processing) or to outcomes that are anticipated (top-down processing). When considering the first of these biases, it is important to realize that the least salient stimulus or event is the nonevent: people do not easily notice the absence of something [433]. Yet the absence of a symptom can be a valuable diagnostic tool in troubleshooting, because it eliminates faulty hypotheses about what might be wrong. For example, the fact that a particular warning light is not on could eliminate a number of competing hypotheses from consideration.
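The diagnostic value of a nonevent can be illustrated with a small hypothesis-elimination sketch. It assumes a hypothetical single-fault table for a car that stalls while driving; the fault names, symptoms, and the `consistent_hypotheses` function are illustrative only, not a method described in this chapter. A hypothesis survives only if it explains every observed symptom and predicts none of the symptoms that were checked and found missing.

```python
# Hypothetical single-fault table for a car that stalls while driving.
# Fault names and symptoms are illustrative, not taken from the text.
FAULTS = {
    "failing alternator": {"engine stalls", "battery light on"},
    "overheating": {"engine stalls", "temperature light on"},
    "fuel pump failure": {"engine stalls"},
    "low oil pressure": {"engine stalls", "oil light on"},
}


def consistent_hypotheses(present, absent, faults=FAULTS):
    """Keep faults that explain every observed (present) symptom and that
    predict none of the symptoms checked and found absent."""
    return [
        fault
        for fault, predicted in faults.items()
        if present <= predicted and not (predicted & absent)
    ]


# Attending only to the salient symptom leaves every hypothesis open.
print(consistent_hypotheses({"engine stalls"}, set()))
# → ['failing alternator', 'overheating', 'fuel pump failure', 'low oil pressure']

# Noting that three warning lights are NOT on eliminates three hypotheses.
print(consistent_hypotheses(
    {"engine stalls"},
    {"battery light on", "temperature light on", "oil light on"},
))
# → ['fuel pump failure']
```

The only difference between the two calls is the set of absent symptoms: the present evidence is identical, yet noticing what did not happen narrows four candidates to one.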

7.6.1 Principles for Improving Problem Solving and Troubleshooting

The systematic errors associated with troubleshooting suggest several design principles.

1. Present alternate hypotheses. An important bias in troubleshooting, resulting from top-down or expectancy-driven processing, is often referred to as cognitive tunneling, or confirmation bias [464, 407]. In troubleshooting, this is the tendency to stay fixated on a particular hypothesis (the one chosen for testing), look for cues to confirm it (top-down expectancy guiding attention allocation), and interpret ambiguous evidence as supportive (top-down expectancy guiding perception). In problem solving, the corresponding phenomenon is becoming fixated on a particular solution and staying with it even when it appears not to be working. Decision aids can challenge a person's hypothesis and highlight disconfirming evidence.

2. Create displays that can act as an external mental model. These cognitive biases are more likely to appear when two features characterize the system under investigation. First, high system complexity (the number of system components and their degree of coupling or links) makes troubleshooting more difficult [465]. Complex systems are more likely to produce incorrect or “buggy” mental models [466], which can hinder the selection of appropriate tests or the correct interpretation of test outcomes. Second, intermittent failures of a given system component are particularly difficult to troubleshoot [462]. A display that shows the underlying system structure, such as the flow through the network of pipes in a refinery, can remove the burden of remembering that information.

3. Create systems that encourage alternate hypotheses. Because of working memory limitations, people generate a limited number of hypotheses [390], typically bringing somewhere between one and four into evaluation. As a result, people often fail to consider all relevant hypotheses [351]. Under time stress, decision makers often consider only a single hypothesis [467]. This restriction degrades the decisions of novices far more than those of experts: the first option an expert considers is likely to be reasonable, whereas the first option a novice considers often is not. Systems that make it easy for people to generate many alternate hypotheses make it more likely that a complete set of hypotheses will be considered.
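Principles 1 and 3 can be combined in a simple decision aid that challenges the operator's favored hypothesis and keeps the alternatives visible. The sketch below is hypothetical: the pumping-line fault table, the `challenge` and `conflicts` functions, and the conflict-counting score are assumptions made for illustration, not a published aid. It reports the evidence that contradicts the favored hypothesis and ranks every other hypothesis by how much evidence conflicts with it, so alternatives are presented rather than left for the operator to generate from memory.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical fault table for a pumping line. Faults, symptoms, and their
# mapping are illustrative only.
FAULTS: Dict[str, Set[str]] = {
    "pump failure": {"low flow", "pump silent"},
    "valve stuck closed": {"low flow", "high upstream pressure"},
    "pipe leak": {"low flow", "fluid on floor"},
    "flow sensor fault": {"low flow"},
}


def conflicts(predicted: Set[str], present: Set[str], absent: Set[str]) -> int:
    """Count evidence against a hypothesis: observed symptoms it does not
    predict, plus predicted symptoms that were checked and found absent."""
    return len(present - predicted) + len(predicted & absent)


def challenge(favored: str, present: Set[str], absent: Set[str],
              faults: Dict[str, Set[str]] = FAULTS) -> dict:
    """Report disconfirming evidence for the favored hypothesis and rank
    every other hypothesis by its number of conflicts."""
    predicted = faults[favored]
    alternatives: List[Tuple[str, int]] = sorted(
        ((name, conflicts(p, present, absent))
         for name, p in faults.items() if name != favored),
        key=lambda item: item[1],  # fewest conflicts first; ties keep table order
    )
    return {
        "unexplained": sorted(present - predicted),   # observed but not predicted
        "contradicted": sorted(predicted & absent),   # predicted but not observed
        "alternatives": alternatives,
    }


# The operator suspects the pump, but the pump is heard running and the
# upstream pressure is high.
report = challenge("pump failure",
                   present={"low flow", "high upstream pressure"},
                   absent={"pump silent"})
print(report["unexplained"])    # ['high upstream pressure']
print(report["contradicted"])   # ['pump silent']
print(report["alternatives"])
# → [('valve stuck closed', 0), ('pipe leak', 1), ('flow sensor fault', 1)]
```

Listing all alternatives, rather than filtering to the consistent ones, is a deliberate choice in this sketch: it keeps the full hypothesis set in view, which is the point of principle 3.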